3.2.3 Hadoop Jobs

3.2.3.1 Hadoop Computing Cluster

The Hadoop computing cluster is a computing system built on Hadoop and optimized for high energy physics computing. It is designed for high reliability, efficiency, scalability and fault tolerance at low cost.

3.2.3.2 Job Submission Instructions

Job submission consists of four steps: account application, user login, job option file preparation and job submission.

1) Account application

(1) Apply for an AFS account

The AFS account application page is: http://afsapply.ihep.ac.cn/ccapply/userapplyaction.action.

If you already have an AFS account, skip this step and go to step (2).

(2) Apply for a Hadoop account

Send an email to the administrator at huangql@ihep.ac.cn.

2) User login

Log in to the login farm ybjslc.ihep.ac.cn (see Section 3.1 for details).

3) Prepare the job option file

Before submitting jobs, you must prepare a job option file. It contains six parts:

(1) JobType: the job type, for example cosmic ray simulation (corsika), detector simulation (Geant4) or reconstruction (medea++).

(2) InputFile/InputPath: the input file or directory. Input data files must be stored under /hdfs.

(3) OutputPath: the output file or directory. For output to HDFS, the path begins with /hdfs; otherwise, use another mount point such as /scratchfs. You also need to specify the prefix and extension of the output file names.

(4) Job Environment settings: the job execution environment, such as setup scripts to source and environment variables to export.

(5) Executable commands: the commands the job runs, together with their parameters.

(6) LogOutputDir: the directory for the job log files.
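Before submitting, it can help to sanity-check the option file. The sketch below is a hypothetical helper (`check_job_option_file` and `get_option` are not part of the cluster software). It assumes, based on the examples in this section, that settings are written as `KEY=value` at the start of a line, that every option file sets `Hadoop_OutputDir` and `Log_Dir`, and that an input directory, if given, lies under /hdfs. Adjust the checks to whatever the framework actually enforces.

```shell
#!/bin/sh
# Hypothetical pre-submission check for a job option file.
# Assumption: settings appear as KEY=value at the start of a line,
# as in the job option file examples.

# Print the value of KEY ($2) from option file $1 (last definition wins).
get_option() {
    sed -n "s/^$2=//p" "$1" | tail -n 1
}

# Return 0 if the file looks complete; otherwise report problems and return 1.
check_job_option_file() {
    file=$1
    rc=0
    if [ ! -r "$file" ]; then
        echo "cannot read $file" >&2
        return 1
    fi
    for key in Hadoop_OutputDir Log_Dir; do
        if [ -z "$(get_option "$file" "$key")" ]; then
            echo "missing $key" >&2
            rc=1
        fi
    done
    # Input data must be stored under /hdfs (part (2) of the option file).
    input=$(get_option "$file" Hadoop_InputDir)
    case $input in
        ""|/hdfs/*) ;;
        *) echo "Hadoop_InputDir must be under /hdfs: $input" >&2; rc=1 ;;
    esac
    return $rc
}
```

A typical use is `check_job_option_file jobOptionFile && hsub ...`, so that an incomplete file is caught before the job reaches the cluster.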

jobOptionFile example 1:

//JobType

JobType=Geant4

//InputFile/InputPath

Hadoop_InputDir=/hdfs/home/cc/huangql/test/corsika-74005-2/

//OutputPath

Hadoop_OutputDir=/hdfs/home/cc/huangql/test/G4asg-3/

Name_Ext=.asg

//Job Environment settings

Eventstart=0

Eventend=5000

source /afs/ihep.ac.cn/users/y/ybjx/anysw/slc5_ia64_gcc41/external/envc.sh

export G4WORKDIR=/workfs/cc/huangql/v0-21Sep15

export PATH=${PATH}:${G4WORKDIR}/bin/${G4SYSTEM}

//executable commands

cat ${Hadoop_InputDir} | /workfs/cc/huangql/v0-21Sep15/bin/Linux-g++/G4asg -output $Hadoop_OutputDir -setting $G4WORKDIR/config/settingybj.db -SDLocation $G4WORKDIR/config/ED25.loc -MDLocation $G4WORKDIR/config/MD16.loc -geom $G4WORKDIR/config/geometry.db ## -nEventEnd $Eventend

//LogOutputDir

Log_Dir=/home/cc/huangql/hadoop/test/logs/

jobOptionFile example 2:

//OutputPath

Hadoop_OutputDir=/hdfs/user/huangql/corsika-74005-4/

//Output File Name Prefix

Name_Prefix={"DAT","DAT"}

//Output File Name Extension

Name_Ext={"",".long"}

// set environment

//source setup.sh

// I: start number (used as the CORSIKA run number)

I=300243

//cycle index

Times=32

// execute program

SD1=`expr 33746 + $I`

SD2=`expr 13338 + $I`

SD3=`expr 54923 + $I`

echo $SD1

echo $SD2

echo $SD3

cd /home/cc/huangql/hadoop/corsika-74005/run

rm -rf $Hadoop_OutputDir/DAT*${I}*

//executable commands

./corsika74005Linux_QGSJET_gheisha<<EOF

RUNNR $I run number

EVTNR 1 number of first shower event

NSHOW 50 number of showers to generate

PRMPAR 14 particle type of prim. particle

ESLOPE -2.7 slope of primary energy spectrum

ERANGE 100.E3 100.E3 energy range of primary particle

THETAP 0 0 range of zenith angle (degree)

PHIP 45 45 range of azimuth angle (degree)

SEED $SD1 0 0 seed for 1. random number sequence

SEED $SD2 0 0 seed for 2. random number sequence

SEED $SD3 0 0 seed for 3. random number sequence

OBSLEV 4300.0E2 observation level (in cm)

FIXCHI 0. starting altitude (g/cm**2)

MAGNET 34.5 35.0 magnetic field centr. Europe

HADFLG 0 0 0 0 0 2 flags hadr.interact.&fragmentation

QGSJET T 0 use QGSJET for high energy hadrons

QGSSIG T use QGSJET hadronic cross sections

ECUTS 0.05 0.05 0.001 0.001 energy cuts:hadr. muon elec. phot.

MUADDI F additional info for muons

MUMULT T muon multiple scattering angle

ELMFLG F T em. interaction flags (NKG,EGS)

STEPFC 1.0 mult. scattering step length fact.

RADNKG 200.E2 outer radius for NKG lat.dens.distr.

ARRANG 0. rotation of array to north

LONGI T 10. T T longit.distr. & step size & fit & out

ECTMAP 1.E2 cut on gamma factor for printout

MAXPRT 1000 max. number of printed events

DATBAS F write .dbase file

DIRECT $Hadoop_OutputDir/ output directory

USER you user

DEBUG F 6 F 1000000 debug flag and log.unit for out

EXIT terminates input

EOF

//LogOutputDir

Log_Dir=/home/cc/huangql/hadoop/logs/
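Example 2 derives the three CORSIKA random seeds from the run number I with `expr`. The same arithmetic can be written with POSIX shell arithmetic `$(( ))`, which avoids starting an external `expr` process. The function name `corsika_seeds` is hypothetical; the offsets 33746, 13338 and 54923 are the ones used in the example.

```shell
#!/bin/sh
# Compute the three CORSIKA seeds used in job option file example 2.
# The offsets come from that example; $1 is the run number I (RUNNR).
corsika_seeds() {
    I=$1
    SD1=$((33746 + I))
    SD2=$((13338 + I))
    SD3=$((54923 + I))
    echo "$SD1 $SD2 $SD3"
}
```

For instance, `corsika_seeds 300243` prints `333989 313581 355166`, the same values the `expr` lines in example 2 produce for I=300243.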

4) Job submission

The command to submit jobs:

hsub queue jobType jobOptionFile jobname

queue: Queue name (ybj, default)

jobType: Job type: MC (simulation job), REC (reconstruction job) or DA (analysis job)

jobOptionFile: Job option file

jobname: Job name
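A small wrapper can catch argument-order mistakes before calling hsub. This is a hypothetical sketch: `submit_job` is not part of the cluster tools, it only checks the job type against the three types listed above and that the option file is readable, and the `HSUB` variable (defaulting to `hsub`) exists only so the call can be dry-run.

```shell
#!/bin/sh
# Hypothetical wrapper around hsub (not part of the cluster software).
# It checks the arguments, then runs the documented command:
#   hsub queue jobType jobOptionFile jobname
# Set HSUB=echo to print the command instead of submitting it.
HSUB=${HSUB:-hsub}

submit_job() {
    if [ $# -ne 4 ]; then
        echo "usage: submit_job queue jobType jobOptionFile jobname" >&2
        return 2
    fi
    # Only the job types listed above are accepted.
    case $2 in
        MC|REC|DA) ;;
        *) echo "unknown job type: $2 (expected MC, REC or DA)" >&2; return 1 ;;
    esac
    # The job option file must exist and be readable.
    if [ ! -r "$3" ]; then
        echo "cannot read job option file: $3" >&2
        return 1
    fi
    "$HSUB" "$1" "$2" "$3" "$4"
}
```

After setting `HSUB=echo`, a call such as `submit_job ybj MC jobOptionFile test1` only prints the hsub command line; swapping the queue and job type is rejected because `ybj` is not a valid job type.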

5) Job management

(1) Query all jobs

mapred job -list all

(2) Query running jobs

mapred job -list

(3) View job status

mapred job -status jobId

(4) Kill job

mapred job -kill jobId

(5) Kill task

mapred job -kill-task taskId

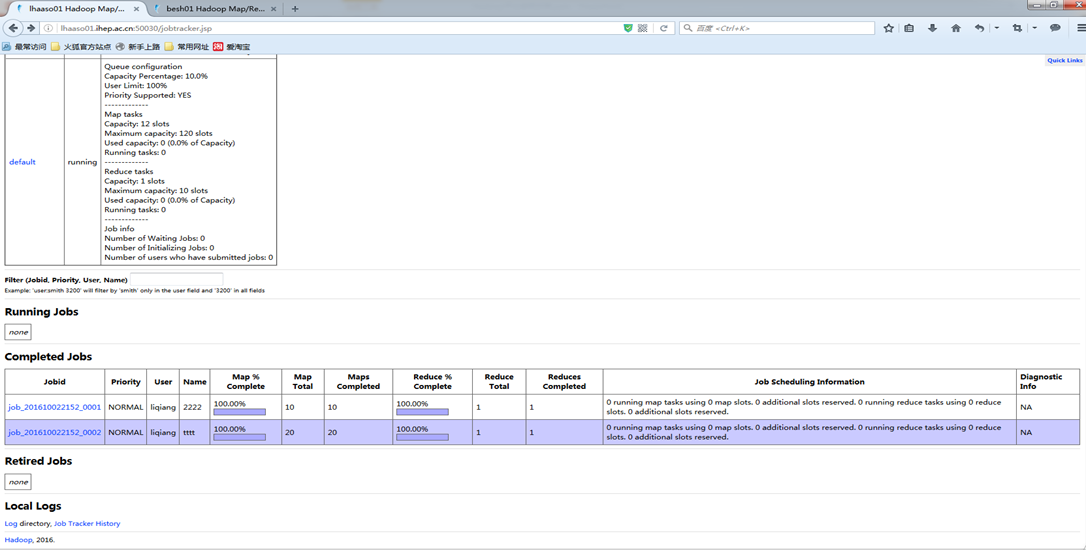
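To act on several jobs at once, the job IDs can be extracted from the `mapred job -list` output. The sketch below assumes Hadoop's usual job ID format `job_<digits>_<digits>` and deliberately does not depend on the column layout of the listing, which differs between Hadoop versions. `list_job_ids` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `mapred job -list` output on stdin and print
# each distinct job ID (job_<digits>_<digits>) once, in order of appearance.
list_job_ids() {
    grep -oE 'job_[0-9]+_[0-9]+' | awk '!seen[$0]++'
}
```

For example, `mapred job -list | list_job_ids | while read -r id; do mapred job -status "$id"; done` shows the status of every running job.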

6) Job monitoring

Open http://lhaaso01.ihep.ac.cn:50030/jobtracker.jsp in a web browser to view job status and log files.

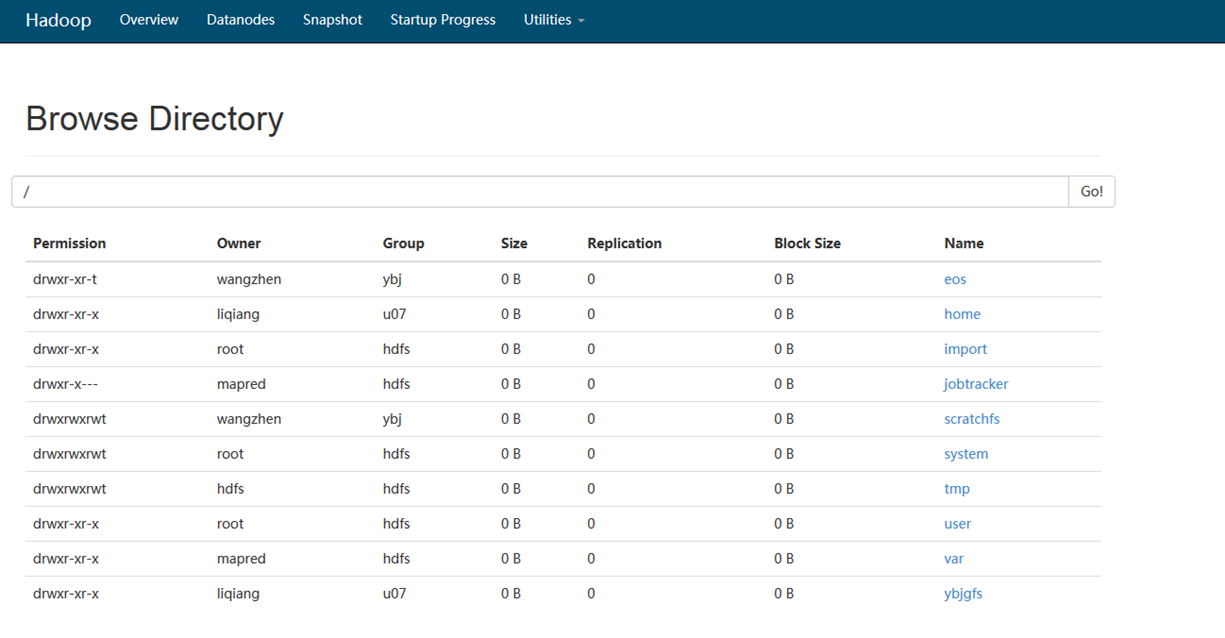

7) HDFS File management

HDFS can be managed in two ways:

(1) Web browser: the HDFS monitoring page, http://lhaaso01.ihep.ac.cn:50070/

(2) Commands

a. list files

hadoop fs -ls /

b. create directory

hadoop fs -mkdir /tmp/input

c. upload file

hadoop fs -put file destdir

hadoop fs -copyFromLocal file destdir

d. download file

hadoop fs -get file destdir

hadoop fs -copyToLocal file destdir

e. read file

hadoop fs -cat file

hadoop fs -tail file

f. delete file

hadoop fs -rm file

g. delete directory

hadoop fs -rm -r dir

h. modify permission

hadoop fs -chmod mode path

hadoop fs -chown owner[:group] path

i. Disk usage

hadoop fs -du -h dir
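The commands above can be combined into a small script that stages a local input directory into HDFS and reports its size. This is a sketch under assumptions: `stage_to_hdfs` is a hypothetical name, `hadoop fs -mkdir -p` requires a Hadoop 2 or later client, and the destination is an HDFS path as seen by `hadoop fs`. Setting `HADOOP=echo` prints the commands instead of running them.

```shell
#!/bin/sh
# Hypothetical helper: copy every regular file in a local directory to an
# HDFS directory, then show the directory size. Set HADOOP=echo for a
# dry run that only prints the commands.
HADOOP=${HADOOP:-hadoop}

stage_to_hdfs() {
    src=$1
    dest=$2
    if [ ! -d "$src" ]; then
        echo "not a directory: $src" >&2
        return 1
    fi
    "$HADOOP" fs -mkdir -p "$dest" || return 1
    for f in "$src"/*; do
        [ -f "$f" ] || continue   # skip subdirectories and an unmatched glob
        "$HADOOP" fs -put "$f" "$dest" || return 1
    done
    "$HADOOP" fs -du -h "$dest"
}
```

Note that `hadoop fs -put` does not overwrite existing files by default, so rerunning the script against a destination that already holds the files stops at the first one.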